Board Playbook: EU AI Act Deadlines You Can’t Miss

- Boards are redefining accountability as AI shifts from experimentation to regulated execution, making oversight of systems, data, and compliance a leadership duty, not a technical one.

- Deadlines now set the governance agenda: the EU AI Act’s phased compliance dates require documentation, reporting, and proof of vendor discipline by specific dates.

- Value and compliance converge through structured AI governance frameworks that combine transparency, testing, and incident response standards tied to measurable board KPIs.

- Board readiness is assessed through control, evidence, and timing, judged by how well directors can demonstrate awareness, assign ownership, and verify actions before each EU AI Act deadline.

The European Union’s AI Act, the world’s first comprehensive binding law governing artificial intelligence, is already in force and places explicit obligations on providers and deployers (users) of AI systems. It marks a turning point: AI governance has moved beyond voluntary ethics codes and guiding principles into an era of hard deadlines, penalties, and regulatory oversight. The Act entered into force in August 2024, its first binding provisions applied from February 2025, and obligations for general-purpose AI (GPAI) followed in August 2025. For boards of directors and senior executives, this period marks a shift from policy awareness to demonstrable governance readiness. The window to show accountability, through robust frameworks, vendor oversight, and verifiable control, is narrowing ahead of the high-risk AI compliance phases in 2026 and 2027.

In corporate settings, the AI Act raises questions of accountability, risk exposure, and timing. Directors must understand their roles, intervene early, and track key deadlines. Missed compliance windows may lead not only to legal fines but also to severe reputational damage in a market that increasingly demands trust in AI.

This blog serves as a board playbook: a precise guide to what matters, by when, and which actions must be visible in board minutes before each milestone. It translates EU AI Act deadlines into the decisions directors must take today.

Why the EU AI Act Matters for Boards

The EU AI Act’s obligations fall on organizations, but they put boards squarely in the frame for AI oversight and EU AI Act compliance. Directors are expected to know where AI is used, how systems are classified, and whether controls meet the law’s requirements. EU AI Act deadlines convert these expectations into time-bound duties. For companies active in or serving the EU market, AI regulation in Europe now shapes governance, capital allocation, and disclosure. In this environment, delegating to management without visible oversight leaves the board in a weak position.

Accountability at the Top

Boards approve the risk posture, direct resources, and set expectations for audit-ready evidence. Committee charters should reference AI oversight, and minutes should reflect specific questions on risk, status, and next steps.

From Policy to Evidence

Written policies are not enough. Directors should ask for a current inventory of AI use cases, the risk category for each, and proof of controls for high-risk AI systems.

Capital Markets and Reputation

Non-compliance can trigger fines, product restrictions, and loss of trust. Investors and regulators treat weak AI controls as a signal of broader governance gaps. Boards that demand clear reporting, defined owners, and dated action plans protect valuation and license to operate.

Key Compliance Timelines at a Glance

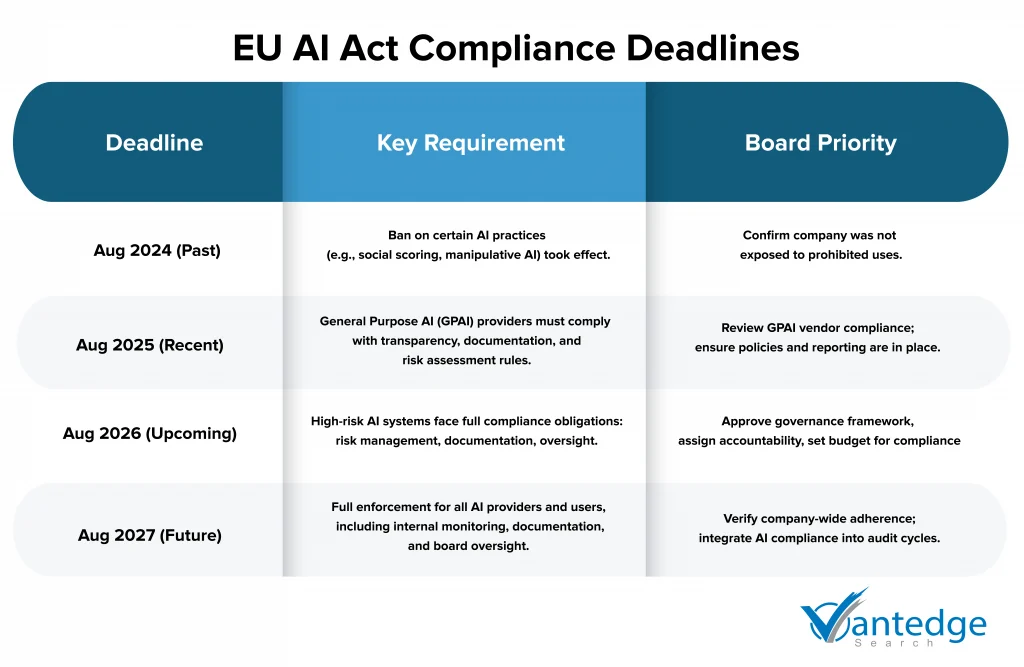

The EU AI Act deadlines define the operational calendar for compliance over the next two years. For boards, these milestones dictate when oversight actions must be documented and when systems, vendors, and governance frameworks must align with the regulation. The first two deadlines have already taken effect, while the next phases require close board supervision to avoid exposure.

How the Timeline is Structured

The Act’s provisions apply in progressive stages, beginning with outright prohibitions and moving toward detailed compliance obligations for general-purpose and high-risk AI systems. Each stage introduces additional verification and documentation requirements that build upon one another. Boards should confirm that internal programs and reporting cycles correspond to these specific milestones.

Implications for Board Oversight

At this stage, the focus for directors should be on converting these EU AI Act deadlines into structured oversight actions. The following priorities outline what the board should already be reviewing and what must appear in management updates going forward:

- Confirm GPAI-related documentation and vendor attestations are complete and accessible.

- Direct management to finalize the AI governance framework and readiness plan for high-risk AI systems.

- Embed compliance tracking into regular audit and risk-reporting cycles.

These milestones function as fixed governance checkpoints. Each board meeting through 2027 should include a brief, dated compliance update tied to these commitments.

High-Priority Actions for Boards

When it comes to action, the focus should now shift from understanding the regulation to demonstrating readiness. The next stage of EU AI Act compliance depends on visible governance structures, current policies, and verifiable evidence of control. Boards should request precise, written updates from management on progress against these priorities rather than broad assurances.

Governance and Accountability

Establishing ownership is the board’s first responsibility. A senior executive, such as the Chief Compliance Officer or another designated leader, should be accountable for AI oversight. The board or its risk committee must include AI compliance within its formal mandate. Meeting minutes should capture decisions, follow-ups, and completion dates to demonstrate traceable supervision.

Policy and Procurement

Every organization using AI, whether internally or through vendors, must update its policy framework. Procurement teams should include clauses that require suppliers to comply with the EU AI Act, provide documentation on model use, and disclose subcontractors. These measures help protect the organization, and the board, against liability arising from third-party systems.

Risk Assessment

Boards should require a full inventory of AI systems currently in operation or development. Each system must be classified according to its risk level under the regulation. High-risk AI systems need detailed technical documentation and testing records. A risk register should accompany every board report through the 2026 compliance phase.
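For teams that keep the inventory as structured data, the gap check a board report needs can be sketched in a few lines of Python. This is a minimal, hypothetical sketch: the `RiskCategory` tiers (the prohibited / high / limited / minimal summary commonly used to describe the Act), the `AISystem` fields, the `register_gaps` helper, and the sample systems are all illustrative assumptions, and real classification requires legal review rather than a label.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    # Simplified summary tiers (an assumption for this sketch); general-purpose
    # AI models are regulated separately and are not modeled here.
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"  # transparency obligations only
    MINIMAL = "minimal"


@dataclass
class AISystem:
    name: str
    owner: str  # accountable risk owner; empty string means unassigned
    category: RiskCategory
    has_technical_docs: bool
    has_test_records: bool


def register_gaps(inventory: list[AISystem]) -> list[str]:
    """List the gaps a board risk register should surface, one per entry."""
    gaps = []
    for system in inventory:
        if system.category is RiskCategory.PROHIBITED:
            gaps.append(f"{system.name}: prohibited practice, must be discontinued")
        elif system.category is RiskCategory.HIGH:
            # Only high-risk systems are checked for documentation and testing.
            if not system.has_technical_docs:
                gaps.append(f"{system.name}: missing technical documentation")
            if not system.has_test_records:
                gaps.append(f"{system.name}: missing testing records")
        if not system.owner:
            gaps.append(f"{system.name}: no assigned owner")
    return gaps


# Hypothetical sample inventory.
inventory = [
    AISystem("cv-screening", "CHRO", RiskCategory.HIGH, True, False),
    AISystem("support-chatbot", "CCO", RiskCategory.LIMITED, False, False),
    AISystem("spam-filter", "", RiskCategory.MINIMAL, False, False),
]
for gap in register_gaps(inventory):
    print(gap)
```

With this sample data the check prints two gaps: `cv-screening: missing testing records` and `spam-filter: no assigned owner`. Classification stays an explicit field so that the register, not the code, records each judgment; the check only surfaces missing owners and missing evidence.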

Vendor and GPAI Compliance

Providers of general-purpose AI models have been subject to transparency and documentation obligations since August 2025. Where such models are used in operations or sourced through vendors, boards should confirm that contracts include warranties covering EU AI Act compliance and that attestations are available upon request. Where providers cannot supply this evidence, management must present an alternative plan or an exit strategy.

Board Training

Directors should receive periodic briefings on the current regulatory status. A concise annual session, conducted by counsel or an external expert, should cover updates, audit requirements, and forthcoming deadlines. This training supports informed oversight and provides evidence of diligence in the event of regulatory review.

Reporting and Monitoring

Management should include an AI compliance dashboard in quarterly board packs. It should display status, open issues, vendor dependencies, and upcoming EU AI Act deadlines. This allows directors to verify that commitments are being met and that corrective measures are progressing.

Effective oversight at this stage signals disciplined governance to regulators, investors, and employees. Boards that act on these priorities reduce legal exposure and strengthen organizational accountability ahead of the next enforcement phase.


Building a Governance and Risk Framework by 2026

The next milestone under the EU AI Act focuses on high-risk AI systems. Compliance by 2026 will depend on how well organizations have embedded structured oversight into their operating model. Boards must move beyond policies and statements of intent to measurable governance frameworks that link accountability, documentation, and continuous monitoring.

Governance Structures

A board-approved governance structure is the foundation of compliance. It should specify who owns AI risk, how reporting flows between management and the board, and what authority committees hold to approve or pause AI initiatives. This framework must align with the company’s existing control systems so that AI compliance becomes part of the same rhythm as financial, audit, and operational reviews.

Integration with Enterprise Risk Management

AI compliance cannot exist in isolation. The AI Act treats risk management as a recurring obligation that covers data quality, model accuracy, bias detection, and security. These areas should be captured within the broader enterprise risk management framework, allowing the board to monitor exposure across functions. Each high-risk AI system should have an assigned risk owner, an updated assessment record, and testing evidence reviewed at least annually.

Documentation and Record Keeping

High-risk AI systems require detailed technical documentation, including system purpose, design, data sources, validation methods, and performance metrics. Boards should verify that this documentation exists, and that internal audit or compliance teams have reviewed it for accuracy. The documentation must be easily retrievable in the event of a regulatory inquiry.

Internal Reporting and Monitoring Channels

The Act also requires post-market monitoring for high-risk systems. Boards should instruct management to create a defined process for collecting and escalating incident reports, model updates, and audit findings. A simple reporting channel that flags anomalies or compliance breaches early can limit regulatory exposure and operational disruption.

By 2026, boards will be judged on the maturity of their governance and risk frameworks. A structure that links accountability, documentation, and oversight is not only a regulatory requirement but also a signal to investors and partners that the organization manages AI responsibly.

Vendor and Supply Chain Risks

AI compliance does not stop at the company’s internal systems. The EU AI Act assigns obligations across the AI value chain, including to organizations that place AI on the EU market, integrate it into their own products, or use AI supplied by third parties. This makes vendor oversight one of the most material board risks under the regulation. Boards should recognize that even a compliant internal program can fail if vendors or partners breach the law.

Third-party Exposure

Many organizations rely on general-purpose AI tools, data analytics vendors, or software providers that embed AI functions into their products. Each supplier relationship carries compliance implications. If a vendor’s AI system is later deemed high-risk or non-compliant, liability can flow to the contracting entity. Boards should require management to maintain an updated vendor register that lists AI-related products, their risk classification, and any associated attestations of conformity.

Contractual Protections

Contracts with vendors should include clauses that address EU AI Act compliance. These clauses must require providers to maintain documentation, notify clients of regulatory updates, and submit to audits where needed. The inclusion of indemnity provisions for non-compliance can protect the organization from downstream financial penalties or product restrictions.

Oversight and Audit

Procurement and compliance teams should implement a vendor review cycle that aligns with the Act’s deadlines. This review should check whether vendors have completed conformity assessments, maintain technical documentation, and can demonstrate traceability in their AI lifecycle. Boards should ask for a summary of these findings at least twice a year and verify that non-compliant vendors are being remediated or replaced.

Questions for Directors to Ask:

- Which vendors currently provide AI-enabled systems, and what is their risk classification?

- Are contractual warranties in place confirming compliance with European Union AI regulation?

- How does management verify vendor claims and monitor ongoing adherence?

- What is the escalation process if a vendor fails to meet regulatory standards?

An organization’s AI compliance is only as strong as its weakest supplier. By treating vendor oversight as a core board responsibility, directors demonstrate a mature approach to risk management and reinforce their organization’s standing with regulators and investors alike.

Financial, Legal, and Reputational Consequences of Missing Deadlines

Non-compliance with the EU AI Act exposes organizations to financial, legal, and reputational risks that extend far beyond regulatory review. Its penalty framework is modeled on the General Data Protection Regulation, and its top fine ceiling (7% of global turnover) exceeds the GDPR’s 4%, placing AI oversight firmly within the board’s scope of accountability.

Potential Penalties and Enforcement Actions

Missing EU AI Act deadlines can lead to fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher, for prohibited AI practices, and up to €15 million or 3% for breaches of most other obligations. These penalties can apply to both providers and deployers (users) of AI systems. Regulators may also suspend operations or block market access within the European Union, disrupting business continuity. Beyond direct fines, non-compliance can trigger investigations across jurisdictions, increasing audit exposure and legal costs. Boards must insist that evidence of compliance is documented, reviewed, and readily available for inspection.

Impact on Investor Trust, Customers, and Regulators

Public enforcement actions or audit findings can quickly undermine confidence in leadership. Investors interpret compliance failures as governance weakness, while customers may question the integrity of AI-driven products. Regulators tend to intensify oversight once a company shows signs of internal control gaps, increasing operational pressure. Maintaining transparent communication and timely disclosure reinforces trust across all stakeholder groups.

Why Boards Cannot Afford Late Action

Delays shorten preparation time, reduce negotiation leverage with vendors, and heighten the likelihood of rushed compliance errors. Once enforcement begins, corrective measures cost significantly more than preventive action. Boards that prioritize timely execution safeguard enterprise value, maintain confidence among regulators and investors, and demonstrate command of their fiduciary responsibilities.

How Boards Should Track Progress

Ongoing compliance with the EU AI Act requires structured monitoring. Boards should treat AI compliance as a standing agenda item rather than a one-time certification exercise. Regular reporting and clear accountability are the mechanisms that keep organizations aligned with regulatory expectations.

Establishing Measurable Checkpoints

Every board cycle should include a brief update on progress against EU AI Act deadlines. Management should present status reports showing completed actions, pending requirements, and key risks. These updates must include dates, responsible owners, and documentary evidence of completion.

Using Dashboards and Indicators

A concise compliance dashboard helps directors view progress at a glance. The dashboard should track:

- Status of AI system classification and risk assessments.

- Percentage of vendor attestations received and reviewed.

- Completion of technical documentation for high-risk AI systems.

- Training sessions delivered to staff and leadership.

- Upcoming regulatory milestones within the next 12 months.
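The dashboard items above reduce to a handful of ratios plus a date filter. The Python below is a minimal sketch under stated assumptions: `DashboardInputs`, `build_dashboard`, `pct`, and the field names are hypothetical; the milestone dates are the application dates given in this article’s FAQ; and a metric with nothing to measure is reported as 100% complete, which a real dashboard might prefer to show as "n/a".

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Application dates as listed in this article's FAQ.
MILESTONES = {
    date(2025, 2, 2): "Prohibitions and AI-literacy requirements apply",
    date(2025, 8, 2): "General-purpose AI obligations apply",
    date(2026, 8, 2): "Most high-risk AI obligations apply",
    date(2027, 8, 2): "Extended high-risk deadlines apply",
}


@dataclass
class DashboardInputs:
    systems_total: int
    systems_classified: int
    vendors_total: int
    vendor_attestations_reviewed: int
    high_risk_total: int
    high_risk_documented: int
    training_sessions_delivered: int


def pct(part: int, whole: int) -> float:
    # Assumption: nothing to measure counts as complete (avoids dividing by zero).
    return 100.0 if whole == 0 else round(100.0 * part / whole, 1)


def build_dashboard(d: DashboardInputs, today: date) -> dict:
    """Summarize the five dashboard items for a quarterly board pack."""
    horizon = today + timedelta(days=365)
    # Keep only milestones that fall within the next 12 months.
    upcoming = [
        f"{day.isoformat()}: {label}"
        for day, label in sorted(MILESTONES.items())
        if today <= day <= horizon
    ]
    return {
        "classification_complete_pct": pct(d.systems_classified, d.systems_total),
        "vendor_attestations_pct": pct(d.vendor_attestations_reviewed, d.vendors_total),
        "high_risk_docs_pct": pct(d.high_risk_documented, d.high_risk_total),
        "training_sessions": d.training_sessions_delivered,
        "milestones_next_12_months": upcoming,
    }


# Hypothetical figures for one quarter.
report = build_dashboard(
    DashboardInputs(
        systems_total=40, systems_classified=34,
        vendors_total=12, vendor_attestations_reviewed=9,
        high_risk_total=5, high_risk_documented=3,
        training_sessions_delivered=2,
    ),
    today=date(2025, 10, 1),
)
print(report)
```

With these sample figures the dashboard reports 85% of systems classified, 75% of vendor attestations reviewed, 60% of high-risk documentation complete, and one milestone (August 2, 2026) inside the 12-month window.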

Embedding into Governance Cycles

Boards should incorporate AI compliance into the same rhythm as financial, audit, and risk reporting. They should assign responsibility for ongoing monitoring to a specific committee or officer, with the expectation that updates will appear quarterly. By aligning tracking mechanisms with existing governance routines, the organization builds lasting discipline rather than ad-hoc compliance.

Conclusion

The EU AI Act has redefined accountability at the board level. Every deadline now represents a governance test: has the board assigned ownership, received evidence, and acted on time? Compliance is no longer an operational detail but a visible reflection of leadership integrity and preparedness. Boards that act early demonstrate foresight, protect enterprise value, and reinforce trust with regulators, investors, and clients.

AI oversight is now a standing obligation, not a passing concern. The organizations that institutionalize this discipline will be viewed as credible, compliant, and well-governed in the years ahead.

As regulatory expectations evolve, the right board composition is critical. Partner with Vantedge Search to design your AI-ready talent strategy, aligning executive search with coaching to support enterprise oversight and resilience.

FAQs

When do the EU AI Act’s obligations take effect?

The AI Act entered into force on August 1, 2024. Prohibitions on unacceptable AI practices and AI-literacy requirements became applicable on February 2, 2025, and obligations for providers of general-purpose AI (GPAI) models, along with the Act’s governance provisions, took effect on August 2, 2025. Obligations for high-risk AI systems phase in next: most apply from August 2, 2026, with an extended deadline of August 2, 2027, for high-risk AI embedded in products already covered by EU product-safety legislation.

What must boards do to prepare for EU AI Act compliance?

Boards must verify that management has identified all AI systems, classified them by risk level, and assigned accountability. They should demand documented governance structures, vendor compliance evidence, and oversight workflows. Minutes and board reporting need to reflect active review of gaps and timelines. Boards must also ensure sufficient resources, escalation paths, and periodic review are in place.

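What counts as a high-risk AI system, and what does compliance require?

High-risk AI systems fall into two groups: AI used as a safety component of, or itself being, a product covered by the EU product-safety legislation listed in Annex I, and AI used in the sensitive areas listed in Annex III, such as critical infrastructure, education, employment, access to essential services, and law enforcement, where it can affect health, safety, or fundamental rights. Compliance includes a risk management system, data governance, technical documentation, conformity assessments, post-market monitoring, human oversight, and measures for accuracy, robustness, and transparency.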

What are the penalties for non-compliance?

Engaging in prohibited AI practices can draw fines of up to €35 million or 7% of total worldwide annual turnover, whichever is greater. Breaches of most other obligations, including those for high-risk systems, can draw up to €15 million or 3% of turnover, and supplying incorrect or misleading information to authorities up to €7.5 million or 1%. Regulators may also suspend AI operations or restrict market access.
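Because each ceiling is a fixed amount or a share of turnover, whichever is greater, a company’s maximum exposure is simple arithmetic. The sketch below encodes the Act’s three fine tiers (€35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, and €7.5 million or 1% for supplying incorrect or misleading information) and the rule that for SMEs and start-ups the lower of the two figures applies. The function and tier names are hypothetical, and the result is a legal ceiling, not a predicted fine: national authorities set actual amounts case by case.

```python
# Fine ceilings per breach type: (fixed cap in EUR, percentage of total
# worldwide annual turnover for the preceding financial year).
# Tier names are hypothetical labels for this sketch.
CEILINGS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "misleading_information": (7_500_000, 1),
}


def max_fine_eur(breach: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the statutory ceiling in whole euros (not a predicted fine)."""
    fixed_cap, pct = CEILINGS[breach]
    # Integer arithmetic avoids floating-point rounding on large turnovers.
    turnover_cap = worldwide_turnover_eur * pct // 100
    # Most undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000
print(max_fine_eur("other_obligation", 200_000_000))  # 15000000
print(max_fine_eur("other_obligation", 40_000_000, is_sme=True))  # 1200000
```

For a company with €2 billion in turnover, the percentage cap (€140 million) exceeds the fixed €35 million, so the turnover-based figure sets the ceiling for prohibited practices.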

How should boards track EU AI Act compliance progress?

Boards should make AI compliance a recurring agenda item with status updates showing completed, in-progress, and upcoming tasks. They should request a simple dashboard tracking classification status, vendor attestations, documentation progress, and imminent deadlines. Aligning that report with the enterprise risk framework and audit cycles ensures accountability.
