Regulated AI in Insurance: A C-Suite Guide to Automation with Oversight

Table of Contents

- Understanding the Regulatory Landscape

- Strategic Applications of AI in Insurance and Where Oversight Must Intervene

- What Good AI Governance Looks Like

- Detecting Bias Before the Regulator Does

- The C-Suite Playbook: Leading with Foresight and Oversight

- Conclusion: Automation with Accountability is the Only Way Forward

- FAQs

- Insurers are accelerating automation across claims, underwriting, and fraud detection. But without executive oversight, AI can introduce hidden liabilities, from bias and drift to regulatory fines and reputational damage.

- This blog takes you through how C-suite leaders can lead AI adoption with clarity by embedding explainability, audit trails, and model accountability directly into their operations.

- From board-reviewed governance frameworks to role-specific controls for CEOs, CIOs, CROs, and CDOs, you’ll learn how to operationalize artificial intelligence governance in ways that regulators respect and that maintain customer trust.

- We’ll also cover how to detect bias in AI insurance decisions, why human-in-the-loop systems are essential, and how to pre-empt risk before it escalates into litigation or regulatory action.

- Read further to make AI a competitive advantage without compromising oversight.

AI in insurance is no longer a future consideration; it’s a current business imperative. From claims triage to fraud analysis and customer service, artificial intelligence is embedded across the insurance value chain. The industry is not just witnessing transformation; it’s automating it.

According to Conning’s 2025 report, 90% of U.S. insurers are exploring generative AI solutions, with 55% already in deployment. But as insurers race to automate, the real risk isn’t in what the AI can do; it’s in how it’s governed. The moment demands more than acceleration. It demands accountability.

This is where artificial intelligence governance moves from technical formality to C-suite priority. Today, adopting AI in insurance without a strategic governance model is equivalent to underwriting without risk assessment. It invites operational blind spots, regulatory backlash, and reputational damage.

And yet, too many organizations treat AI as a back-office tool managed by IT or operations. That siloed view is dangerously outdated. The responsibility of overseeing algorithmic decisions, especially those affecting policyholders, now rests squarely on executive leadership.

This blog is a strategic lens for CEOs, CROs, CIOs, and Boards navigating the intersection of innovation, compliance, and risk. Keep reading to learn more.

Understanding the Regulatory Landscape

The regulatory expectations for AI in insurance are no longer open to interpretation. They are written, active, and expanding fast, leaving little room for guesswork at the executive level. The days of treating AI oversight as a backend task are over. Now, it sits squarely on the Board agenda.

In 2024, the National Association of Insurance Commissioners (NAIC) released its updated Model Bulletin on the use of artificial intelligence in insurance operations. As of mid-2025, over 24 U.S. states have adopted this framework. It directs every insurer to:

- Define its AI use cases in writing

- Document model risks

- Maintain explainability standards

- Conduct bias audits

- Assign C-suite ownership

Some states have raised the bar even higher. Colorado now requires insurers to report how algorithms influence premium pricing and denials. New York enforces transparency over underwriting models using inferred attributes. Connecticut mandates internal audit mechanisms for AI-driven claims decisions. In California, regulators have begun cross-checking bias testing documentation against actual model behavior during random inspections.

Executives who sign off on these systems are not shielded from scrutiny. If an algorithm wrongly denies coverage or discriminates based on ZIP code proxies, the compliance lapse points upward to the C-suite, not just the vendor or the data science team.

According to the NAIC’s 2025 Insurance Technology Adoption Survey, 84% of health insurers now use AI. Of those, 92% have formal internal AI governance frameworks. These aren’t basic checklists; they include lineage logs, access controls, role-based accountability, and real-time bias monitoring protocols.

That’s the new bar.

Artificial intelligence governance is now expected to function like financial governance: periodic audits, version-controlled documentation, board-reviewed controls, and executive accountability. It is no longer enough to say you “use AI responsibly.” You must show how it was evaluated, monitored, and corrected.

AI in insurance carries risk far beyond technical failure. Regulatory penalties, lawsuits, and lost market confidence are all on the line when governance falls short.

Leadership is expected to know not just where AI is used, but how its decisions are being made and who is accountable for its outcomes.

Strategic Applications of AI in Insurance and Where Oversight Must Intervene

AI in insurance is powering core workflows at scale, from claims triage to fraud detection and underwriting. These systems deliver speed and accuracy gains, but they also shift risk. Where risk grows, oversight must rise too.

Claims triage: By 2025, carriers such as Zurich have automated nearly half of routine claim assessments, reducing processing time by around 50% and improving customer satisfaction by 25%. Yet efficiency isn’t the only metric. If these systems prioritize low-value claims based on ZIP codes, serving efficiency at the cost of fairness, they expose firms to discrimination claims and customer backlash.

(Source: International Journal for Multidisciplinary Research)

Underwriting automation: AI-driven platforms now complete underwriting tasks up to 85% faster, according to Indico Data’s 2024 study. That speed enables near real-time decisions on standard policies. But speed without oversight is simply a fast failure. Each denial or price quote generated by AI demands documented traceability and a human review checkpoint before execution.

Fraud detection: According to a Deloitte report, machine learning tools flag 20–40% of soft fraud cases and up to 80% of hard fraud cases, such as duplicate claims or inflated losses. These systems can help recoup fraudulent payouts and deter fraud, but if trained on biased data, they may disproportionately scrutinize certain demographics. The result? Customer frustration, reputational harm, or regulatory inquiries.

For every high-value case of AI in insurance, the C-suite must enforce:

- Human override triggers – Define thresholds that require manual review and document them.

- Bias detection checkpoints – Regularly test models for proxy discrimination (e.g., based on ZIP, income).

- Audit-grade logging – Preserve full decision trails for every AI outcome, including timestamps, input variables, and reviewer notes.
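To make the three controls above more concrete, here is a minimal Python sketch of an audit-trail record combined with a documented human-override rule. Everything in it is an illustrative assumption rather than a regulatory requirement or any vendor's API: the names `DecisionRecord`, `requires_review`, and `log_decision`, the field layout, and both threshold values are hypothetical and would need to come from an insurer's own governance policy.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone

# Hypothetical thresholds; real values belong in a documented governance policy.
REVIEW_CONFIDENCE_FLOOR = 0.80  # below this confidence, a human must review
REVIEW_AMOUNT_CEILING = 10_000  # amounts above this always get manual review


@dataclass
class DecisionRecord:
    """One audit-trail entry for a single AI outcome."""
    model_version: str
    inputs: dict
    outcome: str
    confidence: float
    needs_human_review: bool
    reviewer_notes: str = ""
    # UTC timestamp captured when the record is created.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def requires_review(outcome: str, confidence: float, claim_amount: float) -> bool:
    """Apply the override triggers: any denial, low confidence, or large amount."""
    return (
        outcome == "deny"
        or confidence < REVIEW_CONFIDENCE_FLOOR
        or claim_amount > REVIEW_AMOUNT_CEILING
    )


def log_decision(model_version: str, inputs: dict, outcome: str,
                 confidence: float) -> DecisionRecord:
    """Build the audit record, including whether a human checkpoint is required."""
    amount = float(inputs.get("claim_amount", 0.0))
    return DecisionRecord(
        model_version=model_version,
        inputs=inputs,
        outcome=outcome,
        confidence=confidence,
        needs_human_review=requires_review(outcome, confidence, amount),
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize the record as one JSON line for append-only storage."""
    return json.dumps(asdict(record), sort_keys=True)
```

In this sketch every denial is routed to a reviewer regardless of model confidence, which mirrors the human review checkpoint described earlier. Whether that rule is appropriate for a given line of business is a policy decision, not a technical one.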

Without these protocols, automation becomes a black box. And once an opaque system misfires, uncovering the failure and containing the fallout becomes far harder.

The C-suite’s role is clear: no AI deployment in claims, underwriting, or fraud detection should proceed without embedded oversight. Performance gains must come with guardrails because ungoverned efficiency can erode trust and invite severe consequences.

What Good AI Governance Looks Like

AI in insurance cannot operate on blind trust. Models must be understood, monitored, and challenged regularly. That’s the role of artificial intelligence governance: not a compliance hurdle, but a leadership tool that builds resilience, credibility, and regulatory alignment.

- Explainability is the foundation. If an underwriter or claims adjuster can’t explain how a model reached a decision, neither can a regulator or a court. Explainable AI (XAI) ensures that outputs are traceable, and logic can be audited in plain English. It also helps insurers defend decisions when challenged by policyholders or regulators. Without it, decisions remain legally vulnerable.

- Model risk management goes further. AI models must be tested for bias, concept drift, and degradation. Drift occurs when the patterns a model learned shift, often silently, leading to flawed decisions. Degradation reduces performance over time. Without monitoring, both risks go unnoticed until the damage is done. Every high-impact model must have a testing schedule, with performance metrics tied to real business and regulatory thresholds.

- Human-in-the-loop design remains critical. Despite automation, insurers are expected to maintain human accountability at key decision points, especially in areas affecting policy eligibility or claims denial. In 2025, the U.S. Department of the Treasury reiterated that “algorithmic decision-making must always preserve a human right to appeal,” particularly in financial and healthcare-related domains.
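As one illustration of the drift monitoring mentioned above, the sketch below computes a Population Stability Index (PSI) between a baseline sample of a model input and a recent production sample. PSI is a widely used drift measure, but it is our choice of example rather than something the cited guidance prescribes. The function names are hypothetical, and the 0.10 and 0.25 cut-offs are a common rule of thumb, not a regulatory threshold.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # A small floor avoids taking log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi, watch=0.10, act=0.25):
    """Map a PSI value to a monitoring status using rule-of-thumb cut-offs."""
    if psi >= act:
        return "investigate"
    if psi >= watch:
        return "watch"
    return "stable"
```

A scheduled job could run this for each high-impact model input and record the status in the model's testing log, so that a change in status triggers the review the testing schedule calls for.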

Finally, governance doesn’t stop at internal controls. Third-party audits, board-level AI policy reviews, and regular bias detection logs are becoming best practices. According to aggregated market research, the global market for AI in claims processing is projected to reach $35.76 billion in 2029 at a compound annual growth rate (CAGR) of 36.6%, driven by advancements in algorithms, insurtech expansion, and rising demand for explainable, ethical AI.

The C-suite must ask:

- Are our AI decisions explainable and auditable today?

- Are we detecting model drift or degradation before it affects customers?

- Can we prove human oversight across all sensitive workflows?

While AI in insurance adds speed and efficiency, without the right governance, it becomes a black box with regulatory consequences. Leaders who treat artificial intelligence governance as a proactive, strategic function, not just a control, will be the ones positioned to scale with trust.

Detecting Bias Before the Regulator Does

AI in insurance has introduced a new kind of risk that is silent, statistical, and deeply consequential: algorithmic bias. These models don’t need to ask for race or gender explicitly to discriminate. They infer. And that inference can create systemic unfairness that triggers regulatory audits, litigation, and public fallout.

Proxy discrimination is at the center of this issue. AI models often use ZIP codes, income levels, marital status, and even education as predictive features. While technically legal, these attributes often correlate with protected classes, leading to outcomes that disproportionately impact certain populations. That’s where compliance risk turns into reputational risk.

This is where bias detection in AI insurance moves from best practice to business imperative. Models must be tested for disparate impact across race, gender, geography, and other sensitive variables, regardless of whether those variables are explicitly used as inputs. Testing must go beyond training data. It must include production-level monitoring and real-world feedback loops.
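A simple way to picture a disparate impact test is to compare approval rates across groups. The Python sketch below does this with an adverse impact ratio. It rests on several assumptions: the function names are hypothetical, the group labels are assumed to be available for testing purposes only (not as model inputs), and the 0.8 cut-off is the "four-fifths" heuristic borrowed from U.S. employment-selection guidance, not an insurance-specific legal standard.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, reference_group):
    """Each group's approval rate divided by the reference group's rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    if ref == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {g: r / ref for g, r in rates.items()}


def flagged_groups(decisions, reference_group, threshold=0.8):
    """Groups whose ratio falls below the (assumed) four-fifths threshold."""
    ratios = adverse_impact_ratios(decisions, reference_group)
    return sorted(g for g, r in ratios.items() if r < threshold)
```

Running the same check on production decisions at a fixed cadence, not only on training data, is what turns it into the kind of ongoing monitoring described above. A flagged group is a prompt for investigation, not proof of discrimination.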

Artificial intelligence governance requires codified remediation plans. When bias is detected, insurers must have a path to pause, correct, and relaunch models. Regulators now expect full version control and detailed records of every correction made. Documentation is no longer a legal cushion; it is a survival requirement.

CROs and Chief Data Officers must own this domain. Governance protocols must define:

- Which AI outputs are tested for bias?

- At what thresholds is intervention triggered?

- How are external vendors held accountable for model performance?

If a carrier cannot answer these questions before a regulator asks, it is already behind schedule.

As one insurance regulatory counsel put it in a recent industry briefing: “AI doesn’t need to ask about race to discriminate. That’s where C-suite oversight begins.”

Leadership must understand this: undetected bias is not a data science failure; it’s an executive failure. AI in insurance must be fair by design, not by assumption. And fairness can’t be validated once. It must be continuously proven.

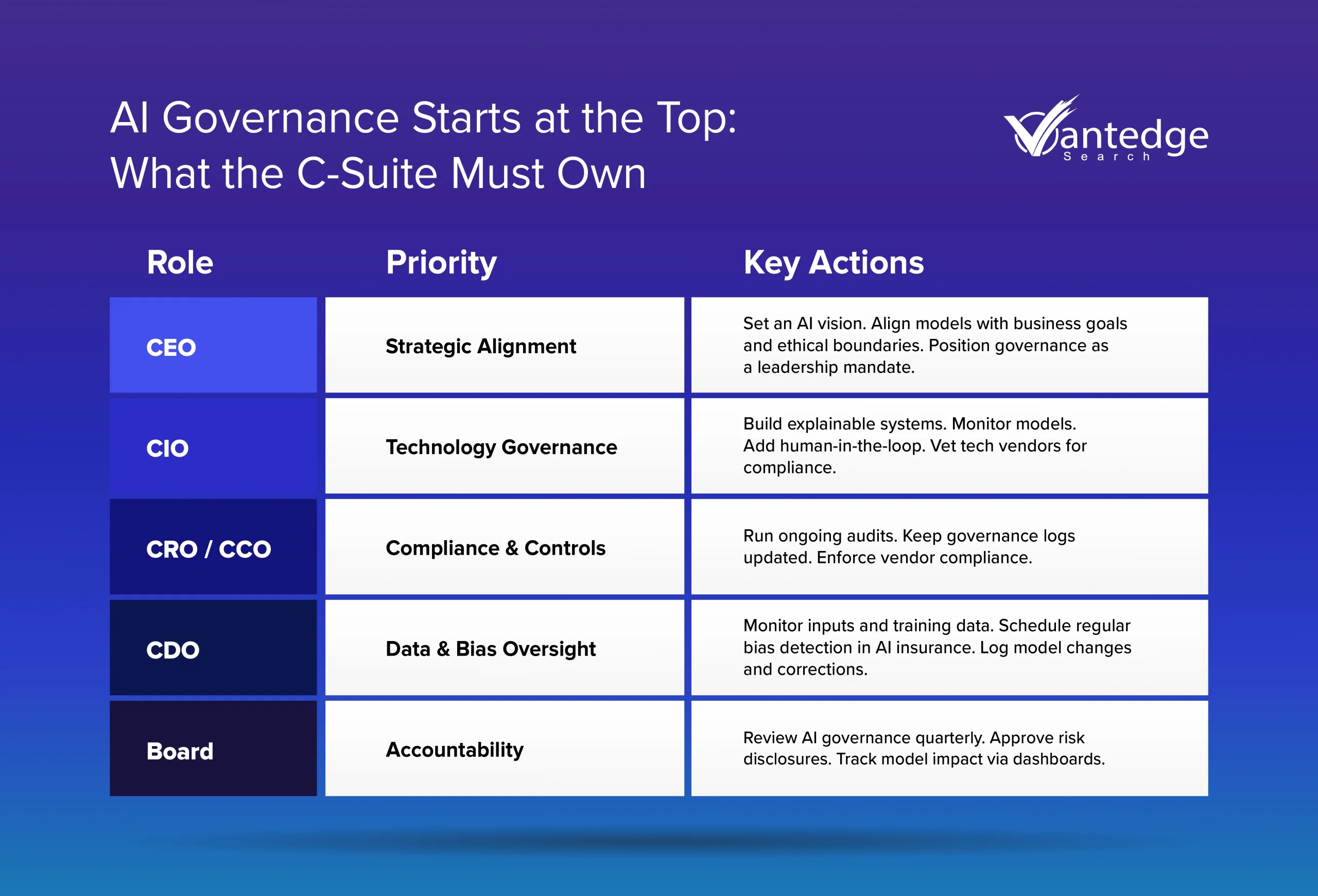

The C-Suite Playbook: Leading with Foresight and Oversight

Governance of AI in insurance cannot be siloed under compliance or delegated to IT. It demands C-level ownership mapped to real-world responsibilities, not abstract principles. Every executive has a distinct role to play in building AI systems that perform, comply, and protect.

This isn’t about overregulation. It’s about operational clarity. Below is a clear framework to help leadership teams operationalize artificial intelligence governance across roles.

This framework allows insurers to move from passive compliance to active oversight. It ensures every dimension of AI in insurance is matched with human accountability backed by real-time controls and executive documentation.

It also creates a clear internal map of liability. If an AI-driven denial or pricing error occurs, the firm should be able to show exactly:

- Who signed off on the model

- What governance reviews were done

- When bias testing occurred

- How the escalation process works
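One way to keep those four answers retrievable is a per-model governance record that can be checked for gaps before an audit. The Python sketch below is purely illustrative: the class name `ModelGovernanceRecord`, its fields, and the 90-day freshness window for bias testing are all hypothetical assumptions, not requirements drawn from any regulation.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class ModelGovernanceRecord:
    """Evidence a firm might keep for each customer-facing model."""
    model_name: str
    signed_off_by: Optional[str] = None             # who signed off on the model
    governance_reviews: List[str] = field(default_factory=list)  # reviews performed
    last_bias_test: Optional[date] = None           # when bias testing last occurred
    escalation_contact: Optional[str] = None        # who handles escalations

    def missing_evidence(self, today: date, max_test_age_days: int = 90) -> List[str]:
        """List which of the four questions cannot currently be answered."""
        gaps = []
        if not self.signed_off_by:
            gaps.append("sign-off")
        if not self.governance_reviews:
            gaps.append("governance reviews")
        # Bias testing counts as missing if it never ran or is older than the window.
        if (self.last_bias_test is None
                or (today - self.last_bias_test).days > max_test_age_days):
            gaps.append("bias testing")
        if not self.escalation_contact:
            gaps.append("escalation process")
        return gaps
```

An empty list from `missing_evidence` means the record can answer all four questions on the given date; anything else names the gap to close.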

Insurers with these answers will not only survive audits; they will also outperform competitors trying to fix governance after the headlines.

Treating artificial intelligence governance as a boardroom discipline reduces legal exposure and increases system resilience. But more importantly, it sends a message across the organization: AI is not a black box. It’s a system of human decisions structured and owned at the top.

Conclusion: Automation with Accountability is the Only Way Forward

AI in insurance has moved from optional to operational. It drives decisions in underwriting, claims, and fraud. But without governance, even well-built systems create silent risks that are regulatory, legal, and reputational.

Leadership can’t treat AI oversight as a side task. Every model that impacts customers must be explainable, tested for bias, and owned by someone at the top. That’s no longer optional; it’s the regulatory baseline.

Artificial intelligence governance does not slow progress. It provides a framework for scaling AI responsibly, with clear controls, logged decisions, and documented accountability.

Without clear AI governance, trust erodes quietly, long before regulators or auditors sound the alarm. C-suite leaders who delay oversight expose the business to investigations and class actions. Those who act now will lead with confidence and build trust faster.

Consult our search experts to find your AI innovation-focused leader in insurance.

FAQs

AI in insurance uses machine learning and automation to speed up underwriting, claims, fraud detection, and customer service, reducing cycle time and improving accuracy.

C-suite oversight matters because AI decisions affect pricing, claims, and customer experience. It ensures these models are fair, explainable, and compliant, not just efficient.

Artificial intelligence governance is the set of policies, audits, and controls that ensure AI is used responsibly in insurance. It includes documentation, bias testing, and clear accountability at the executive level.

Bias detection in AI insurance means testing for unfair outcomes driven by variables like ZIP code or income. Regular audits and human reviews help prevent discriminatory decisions.

AI liability insurance protects insurers from losses caused by flawed AI decisions, covering legal claims, accuracy failures, and reputational harm.
